Every once in a while, I find a need to do something a bit off the wall. Recently, I had another one of those situations.

I’ve spent a lot of time working with some of the old arcade emulators that are floating around (the most famous of which is MAME, the Multiple Arcade Machine Emulator).

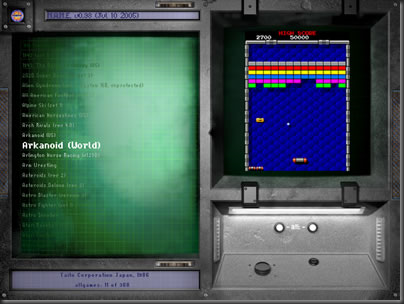

MAME itself is pretty utilitarian, so there are a number of front ends: essentially menu systems that give the user an easy-to-browse interface for selecting games to play. Among the more popular front ends is MaLa.

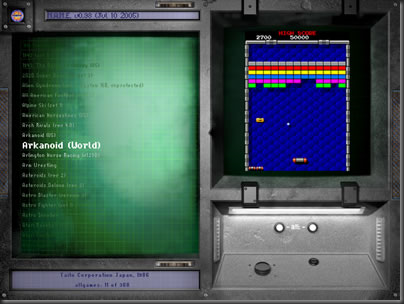

MaLa main screen (using one of many available skins), showing the list of games and a screenshot of the selected game

One nice aspect of MaLa is that it supports plugins, and a number of them are already out there to control LED lights, play speech, and so on.

I’d had a few ideas for possible MaLa plugins for a while, but the MaLa plugin architecture centers on creating a standard Win32 DLL with old-fashioned C-style entry points, and, well, I kinda like working in VB.net these days.
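For anyone who hasn’t written one, here is roughly what an old-fashioned C-style entry point looks like when the plugin is written in C. This is a minimal sketch, not MaLa’s documented plugin header: the entry point name and its single `int` argument come from the `MaLaOrientationSwitch` function discussed below, the cdecl convention matches the attribute used there, and the `PLUGIN_API`/`PLUGIN_CALL` macros and the `g_orientation` variable are hypothetical.

```c
/* Minimal sketch of a C-style DLL entry point, assuming the host calls it
 * with the cdecl convention and a single int argument.
 * PLUGIN_API and PLUGIN_CALL are hypothetical helper macros. */
#if defined(_WIN32)
#define PLUGIN_API  __declspec(dllexport) /* put the name in the DLL export table */
#define PLUGIN_CALL __cdecl               /* caller removes the argument afterwards */
#else
#define PLUGIN_API                        /* no-ops so the sketch compiles elsewhere */
#define PLUGIN_CALL
#endif

/* Hypothetical plugin state: remember the last value the host passed in. */
static int g_orientation = 0;

/* The exported function: one int argument, no return value. */
PLUGIN_API void PLUGIN_CALL MaLaOrientationSwitch(int orientation)
{
    g_orientation = orientation;
}
```

Built as a Windows DLL, `__declspec(dllexport)` places `MaLaOrientationSwitch` in the DLL’s export table. That table is exactly what a .net assembly doesn’t get out of the box, and it’s what the export tools described below add after compilation.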

Gone Hunting

Eventually, curiosity got the better of me, and I started looking for ways to expose standard DLL entry points from a .net assembly. I ended up finding Sevin’s CodeProject entry, ExportDLL, which allows just that. Essentially, it works like this:

- You add a reference in your project to a DLL he created that contains only a single attribute, used to mark the functions you want to export.
- You create the functions you want to export in a MODULE (whose members are implicitly Shared).
- You mark those functions with the attribute.
- You compile your DLL.
- You run ExportDLL against your freshly compiled DLL.
- ExportDLL disassembles your DLL into IL, tweaks it, and reassembles the IL back into a DLL.

It sounds complicated, but it’s really not.

I set it all up and had things working in about 30 minutes.

Gone South

Unfortunately, all was not quite right. Two of the entry points MaLa calls (out of a whole host of them) take a single integer argument passed on the stack. Pretty simple stuff. So I coded up:

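```vb
Imports System.Runtime.InteropServices ' for CallingConvention
Module MaLaPlugin ' hypothetical name; Module members are implicitly Shared
    <ExportDLL("MaLaOrientationSwitch", CallingConvention.Cdecl)> _
    Public Sub EntryPoint_MaLaOrientationSwitch(ByVal Orientation As Integer)
    End Sub
End Module
```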

But when I loaded the plugin in MaLa, MaLa crashed immediately after calling this function, even with NO CODE in the function body.

What this told me was that something in the export process was trashing the stack. I spent a solid day hunting for clues as to what might be failing. I did find that removing the argument from the exported entry point let MaLa run properly AND call my entry point, but without the Orientation value, the call was basically useless.
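The post doesn’t establish why the exported function trashed the stack, but one plausible explanation, offered here only as an assumption, is a calling-convention mismatch between MaLa and the exported stub. On 32-bit x86, cdecl has the caller remove the arguments after the call returns, while stdcall has the callee remove them. If the two sides disagree, the 4-byte integer is removed either twice or not at all, leaving the stack pointer off by 4 bytes when MaLa continues, which can crash it even when the function body is empty. That would also fit the observation that dropping the argument made the crash go away: with no arguments there is nothing to remove, so the two conventions behave the same. The sketch below shows the contract from the host’s side; the type and helper names are hypothetical, and a local stand-in replaces the real plugin so it compiles anywhere.

```c
#include <stddef.h> /* NULL */

#if defined(_WIN32)
#define PLUGIN_CALL __cdecl /* caller removes the argument after the call */
#else
#define PLUGIN_CALL         /* no-op so the sketch compiles on other platforms */
#endif

/* The host's view of the export: cdecl, one int argument, no return value.
 * Plugin and host must agree on this exact signature and convention. */
typedef void (PLUGIN_CALL *OrientationSwitchFn)(int orientation);

/* Hypothetical stand-in for a plugin's exported function. In a real Windows
 * host the pointer would come from GetProcAddress(module, "MaLaOrientationSwitch"),
 * cast to OrientationSwitchFn. */
static int g_received = -1;

static void PLUGIN_CALL StandInOrientationSwitch(int orientation)
{
    g_received = orientation;
}

/* Hypothetical host helper: forward the value if the plugin exports the
 * entry point, and report whether a call was made. */
static int NotifyOrientation(OrientationSwitchFn fn, int orientation)
{
    if (fn == NULL) {
        return 0; /* plugin does not implement this entry point */
    }
    fn(orientation); /* cdecl: the caller cleans up the stack after this returns */
    return 1;
}
```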

Gone Around Again

While digging through it all, I happened to notice a comment on the CodeProject page for Sevin’s article pointing to a similar library by Robert Giesecke. I’m not sure whether the two were developed independently, but Robert’s is certainly a more polished set of deliverables. He even went so far as to put together a C# project template that makes it ridiculously easy to kick off a project using his technique.

It turns out that not only is Robert’s approach cleaner, it also exports the MaLaOrientationSwitch function above correctly. MaLa can call it and pass in the argument, and all is good.

Gone Fishing

One big difference between the two techniques is that Robert actually defines an MSBuild targets file to patch his DLL directly into the Visual Studio build process. Very cool! But his export step runs AFTER the PostBuildEvent target, and it was in that target that I’d set up some commands to copy the DLL to a file named *.MPLUGIN, which is what MaLa specifically looks for. Hooking that copy into the build itself makes debugging quite natural, but with Mr. Giesecke’s target running last, my post-build step would copy the DLL before its entry points had been exported.

Here’s Robert’s targets file:

```xml
<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <UsingTask TaskName="RGiesecke.DllExport.MSBuild.DllExportTask"
             AssemblyFile="RGiesecke.DllExport.MSBuild.dll"/>
  <Target Name="AfterBuild"
          DependsOnTargets="GetFrameworkPaths">
    <DllExportTask Platform="$(Platform)"
                   PlatformTarget="$(PlatformTarget)"
                   CpuType="$(CpuType)"
                   EmitDebugSymbols="$(DebugSymbols)"
                   DllExportAttributeAssemblyName="$(DllExportAttributeAssemblyName)"
                   DllExportAttributeFullName="$(DllExportAttributeFullName)"
                   Timeout="$(DllExportTimeout)"
                   KeyContainer="$(KeyContainerName)$(AssemblyKeyContainerName)"
                   KeyFile="$(KeyOriginatorFile)"
                   ProjectDirectory="$(MSBuildProjectDirectory)"
                   InputFileName="$(TargetPath)"
                   FrameworkPath="$(TargetedFrameworkDir);$(TargetFrameworkDirectory)"
                   LibToolPath="$(DevEnvDir)\..\..\VC\bin"
                   LibToolDllPath="$(DevEnvDir)"
                   SdkPath="$(FrameworkSDKDir)"/>
  </Target>
</Project>
```

I’d worked with MSBuild scripts before, so I knew what they were capable of; I just couldn’t remember the exact syntax. A few Google searches jogged my memory, and I ended up at a great post describing exactly how to inject your own targets before or after specific predefined targets.

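I modified Robert’s targets file, giving the target its own name (ExportDLLPoints) instead of AfterBuild and appending it to the PostBuildEventDependsOn property, which the standard PostBuildEvent target uses as its list of dependencies. That way the export runs before any post-build commands. Here’s the result: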

```xml
<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <UsingTask TaskName="RGiesecke.DllExport.MSBuild.DllExportTask"
             AssemblyFile="RGiesecke.DllExport.MSBuild.dll"/>
  <!-- Add to the PostBuildEventDependsOn group to force the ExportDLLPoints
       target to run BEFORE any post build steps (cause it really should) -->
  <PropertyGroup>
    <PostBuildEventDependsOn>
      $(PostBuildEventDependsOn);
      ExportDLLPoints
    </PostBuildEventDependsOn>
  </PropertyGroup>
  <Target Name="ExportDLLPoints"
          DependsOnTargets="GetFrameworkPaths">
    <DllExportTask Platform="$(Platform)"
                   PlatformTarget="$(PlatformTarget)"
                   CpuType="$(CpuType)"
                   EmitDebugSymbols="$(DebugSymbols)"
                   DllExportAttributeAssemblyName="$(DllExportAttributeAssemblyName)"
                   DllExportAttributeFullName="$(DllExportAttributeFullName)"
                   Timeout="$(DllExportTimeout)"
                   KeyContainer="$(KeyContainerName)$(AssemblyKeyContainerName)"
                   KeyFile="$(KeyOriginatorFile)"
                   ProjectDirectory="$(MSBuildProjectDirectory)"
                   InputFileName="$(TargetPath)"
                   FrameworkPath="$(TargetedFrameworkDir);$(TargetFrameworkDirectory)"
                   LibToolPath="$(DevEnvDir)\..\..\VC\bin"
                   LibToolDllPath="$(DevEnvDir)"
                   SdkPath="$(FrameworkSDKDir)"/>
  </Target>
</Project>
```

Now I can compile, have the entry points exported, and then run my post-build event to copy the DLL over to the MaLa Plugins folder under the requisite MPLUGIN name, all completely automatically.

And the icing on the cake is that I can build a MaLa plugin completely in VB.net, with no C or C# forwarding wrapper layer, and with a fantastic XCOPY-able, single-DLL footprint (save for the .net runtime, of course<g>).

It’s a wonderful thing.